The DataDigger is over 40,000 lines of code. Inside are some real treasures, so I will dissect the DataDigger, to reveal them. Today: caching. Again.

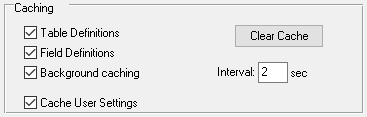

The DataDigger remembers a lot. That may be something that goes by largely unnoticed, but things like the window size, its position, what table you selected, which fields you hid or what filters you used, all of it is remembered in the settings file. But there is more, because the settings you use in the settings screen are saved in the ini file as well. These settings are saved and restored when you start DataDigger again. But what is going on behind the scenes?

Settings can be saved on disk in a number of ways. For DataDigger I decided that I wanted to use a common format for the settings and I chose the ini file format as used by Windows. This is a fairly readable format and allows for external tools to edit the file, should it be necessary. Other possible formats would have been an xml file, a json file or a proprietary format, but I settled on a simple structure:

DEFINE TEMP-TABLE ttConfig NO-UNDO

FIELD cSection AS CHARACTER

FIELD cSetting AS CHARACTER

FIELD cValue AS CHARACTER

INDEX idxPrim IS PRIMARY cSection cSetting.

Populating it is straightforward:

PROCEDURE readConfigFile :

DEFINE INPUT PARAMETER pcConfigFile AS CHARACTER NO-UNDO.

DEFINE VARIABLE cSection AS CHARACTER NO-UNDO.

DEFINE VARIABLE cLine AS CHARACTER NO-UNDO.

INPUT FROM VALUE(pcConfigFile).

REPEAT:

IMPORT UNFORMATTED cLine.

IF cLine MATCHES "[*]" THEN cSection = TRIM(cLine,"[]").

IF NUM-ENTRIES(cLine,'=') = 2 THEN

DO:

FIND ttConfig

WHERE ttConfig.cSection = cSection

AND ttConfig.cSetting = ENTRY(1,cLine,"=") NO-ERROR.

IF NOT AVAILABLE ttConfig THEN

DO:

CREATE ttConfig.

ASSIGN

ttConfig.cSection = cSection

ttConfig.cSetting = ENTRY(1,cLine,"=").

END.

ttConfig.cValue = ENTRY(2,cLine,"=").

END.

END.

INPUT CLOSE.

END PROCEDURE. /* readConfigFile */

Note that this is a simplified version of what is used in DataDigger. No buffers are used (you really should use buffers, like: always) and no edge cases are handled here.

DataDigger’s INI files

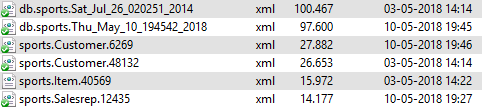

DataDigger uses 3 different .ini files. One is for DataDigger itself; its primary task is to save time stamps of the source files. On startup, the time stamps of the current files in the DataDigger folder are compared to those in the .ini file and based on that, DataDigger decides whether or not to recompile itself.

The second file is for the help messages. In hindsight, these could have been put in the primary .ini file, but in the early days of DataDigger I thought it would be handy to have them in a separate file.

The last one is the user-specific .ini file for the settings of the user. The .ini file is appended with the login name of the user so each user will have his own settings file. In this file all settings are saved that are a result of the user’s actions.

This last one is the one that gets most read and write actions. When I introduced the settings file, this was a nice feature to save and restore user settings, but as DataDigger developed, more and more ended up in the settings file and eventually, reading and writing became noticeable (read: slow).

Settings, version 1

The very first version was one that read its settings straight from the INI file itself, using GET-KEY-VALUE and PUT-KEY-VALUE. The temp-table as shown above was not yet used. Although straightforward, it was slow, so I quickly moved on to plan B.

Settings, version 2

Plan B was called “Hello Caching”. At the beginning of the session, I read the .ini file into ttConfig and served all settings from there. Saving was done at the end of the session. This worked way better than the previous solution, but a problem arose when your session crashed prematurely, because your settings would not be saved. This was not the only problem, because when you had two windows active at the same moment, the settings would get out of sync very easy.

Settings, version 3

Enter version 3. The settings needed to be saved when changed, so I read them on startup, but saved them to disk whenever they changed, so data was saved when the session would crash. The temp-table was moved to the persistent library, so when running multiple windows, the settings would remain in sync.

At this point, the code to get/set the config basically boils down to:

FUNCTION getRegistry RETURNS CHARACTER

( pcSection AS CHARACTER

, pcKey AS CHARACTER ) :

FIND ttConfig

WHERE ttConfig.cSection = pcSection

AND ttConfig.cSetting = pcKey NO-ERROR.

RETURN ( IF AVAILABLE ttConfig THEN ttConfig.cValue ELSE ? ).

END FUNCTION. /* getRegistry */

FUNCTION setRegistry RETURNS CHARACTER

( pcSection AS CHARACTER

, pcKey AS CHARACTER

, pcValue AS CHARACTER ) :

FIND ttConfig

WHERE ttConfig.cSection = pcSection

AND ttConfig.cSetting = pcKey NO-ERROR.

IF NOT AVAILABLE ttConfig THEN DO:

CREATE ttConfig.

ASSIGN ttConfig.cSection = pcSection

ttConfig.cSetting = pcKey.

END.

IF pcValue = ? OR pcValue = ''

THEN DELETE ttConfig.

ELSE ttConfig.cValue = pcValue.

RETURN "".

END FUNCTION. /* setRegistry */

Again: stripped of buffers and edge cases

This solution has been used pretty long in DataDigger, but since more and more got saved into the settings file, the writing process became a problem, so I needed to fix that. The answer to this was delayed writing to disk. Writing to disk involves some serious overhead where it does not really matter if you are writing one setting to disk or hundred. The extra time involved is a matter of milliseconds; saving 100 settings to disk one-by-one takes approximately 80 msec. Saving 100 settings in one pass takes 3 msec.

Settings, version 4

First, we add a new field to ttConfig to indicate the value has changed.

DEFINE TEMP-TABLE ttConfig NO-UNDO

FIELD cSection AS CHARACTER

FIELD cSetting AS CHARACTER

FIELD cValue AS CHARACTER

FIELD lDirty AS LOGICAL

INDEX idxPrim IS PRIMARY cSection cSetting.

This field – lDirty – will be set to TRUE whenever we change a value in the table. So, the function setRegistry is changed to this:

FUNCTION setRegistry RETURNS CHARACTER

( pcSection AS CHARACTER

, pcKey AS CHARACTER

, pcValue AS CHARACTER ) :

FIND ttConfig

WHERE ttConfig.cSection = pcSection

AND ttConfig.cSetting = pcKey NO-ERROR.

IF NOT AVAILABLE ttConfig THEN DO:

CREATE ttConfig.

ASSIGN ttConfig.cSection = pcSection

ttConfig.cSetting = pcKey.

END.

IF pcValue = ? OR pcValue = ''

THEN DELETE ttConfig.

ELSE ASSIGN ttConfig.cValue = pcValue

ttConfig.lDirty = TRUE.

RETURN "".

END FUNCTION. /* setRegistry */

As you can see, only one extra line of code. Now, we add a timer (check my post ‘Turn timers into a scheduler‘ on how to do that) and let it periodically check whether there is anything to save:

IF CAN-FIND(FIRST ttConfig WHERE ttConfig.lDirty = TRUE) THEN

DO:

OUTPUT TO VALUE(cConfigFile).

FOR EACH ttConfig BREAK BY ttConfig.cSection:

ttConfig.lDirty = FALSE.

IF FIRST-OF(ttConfig.cSection) THEN

PUT UNFORMATTED

SUBSTITUTE("[&1]",ttConfig.cSection) SKIP.

PUT UNFORMATTED

SUBSTITUTE("&1=&2",ttConfig.cSetting, ttConfig.cValue) SKIP.

END.

OUTPUT CLOSE.

END.

This timer is executed every 5 seconds and on window-close, to make sure that even the last few settings are saved.

Caveat

One last warning: the code above is not literally from the DataDigger. If you explore the code on GitHub (go ahead, it’s open) you will see that the code there is much longer, uses buffers and handles a lot of edge cases. I left a lot of that code out to make the code more readable. If you decide to implement settings in your application similar to what is described above, you should probably check the real code as well.

![spgTHgA[1]](https://datadigger.files.wordpress.com/2019/12/spgthga1.png)

![ox2ywSK[1]](https://datadigger.files.wordpress.com/2019/12/ox2ywsk1.png)